Canvas is a care modeling platform designed to help clinicians deliver good medicine. In our efforts to support increasingly advanced care models, we focused on correct informatics, platform completeness, and extensibility. However, we didn’t pay enough attention to application performance, and as a result latency slipped in certain scenarios. We were reminded that it doesn't matter how smart a system aspires to be if it isn't reliably fast across users and patients, every single day, for every customer.

Last quarter we applied significant resources to address latency issues in our software. It was not a straight path to victory, but the end result speaks for itself: we brought 99th percentile UI latency down 40% overall and a whopping 60% for the subset of customers who were most affected. Here is a time series plot of actual 99th percentile ("p99") UI latency for one such customer. You can see the performance improvement after our release on June 8th:

In this post I will share details on how we investigated the issues and the steps we took to accomplish this result. We hope it may be useful for teams maintaining large React applications that serve users in widely varying and highly demanding conditions.

How we determined the problems

Evidence of our performance problems came from several different sources. Our own monitoring and analytics showed some of the issues: slow back-end API performance and front-end workflows that took too long. Feedback from our customers, however, painted a more urgent picture: slow page loads and even UI lockups lasting many seconds.

Some of these issues had simple causes. An initial performance investigation identified the back-end cause of much of the time spent loading a patient’s chart. Optimizing queries and adding indexes fixed many of the back-end API issues.

Reproducing some of the worst UI latency issues, however, initially vexed us. The issues did not depend on the number of concurrent users, patient volume, total data volume, or other dimensions of scale. Instead, they depended on the particular customizations and complex workflows of the specific customers experiencing them. Variation was wide: about 5% of customers were affected by p99 UI latency over 8 seconds, while the other 95% had typical p99 UI latency under 2 seconds, though a bit spiky, as you can see in the side-by-side plots below:

To dig deeper into these customer-specific patterns, we asked for and received permission to clone customer data for critical performance investigations. We then got to work and found several additional sources of front-end performance degradation.

We initially hypothesized that the long pauses our customers reported were caused by Chrome’s garbage collector running and blocking the UI. This led us to examine our front-end memory consumption as a whole and to gain expertise in parts of the web platform like COOP and COEP (the Cross-Origin-Opener-Policy and Cross-Origin-Embedder-Policy headers), which are required to use measureUserAgentSpecificMemory, an API that reports how much memory the current tab is using. Using this API, we were able to disprove the garbage collection hypothesis and investigate other areas.
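As a rough sketch of how such a measurement can be guarded, the TypeScript below only calls `performance.measureUserAgentSpecificMemory()` when the page is cross-origin isolated. It assumes the page is served with the standard header pair shown in `ISOLATION_HEADERS`. The helper name `sampleMemoryBytes` and the interfaces are hypothetical. The `performance` object and isolation flag are passed in so the logic can run outside a browser.

```typescript
// Response headers that make a page cross-origin isolated, which
// performance.measureUserAgentSpecificMemory() requires.
const ISOLATION_HEADERS: Record<string, string> = {
  "Cross-Origin-Opener-Policy": "same-origin",
  "Cross-Origin-Embedder-Policy": "require-corp",
};

// Minimal shape of the API's result; some TypeScript DOM typings omit it.
interface UAMemoryResult {
  bytes: number;
  breakdown: Array<{ bytes: number; types: string[] }>;
}

interface MemoryCapablePerformance {
  measureUserAgentSpecificMemory?: () => Promise<UAMemoryResult>;
}

// Hypothetical helper: resolves to the total byte estimate, or null when the
// API is missing (unsupported browser) or the page is not cross-origin isolated.
async function sampleMemoryBytes(
  perf: MemoryCapablePerformance,
  isolated: boolean,
): Promise<number | null> {
  const measure = perf.measureUserAgentSpecificMemory;
  if (!isolated || typeof measure !== "function") return null;
  // Call with `perf` as `this`; the real browser method requires it.
  const result = await measure.call(perf);
  return result.bytes;
}
```

In a browser, one would pass the global `performance` object and the global `crossOriginIsolated` flag, for example from a periodic timer, and report the bytes alongside other telemetry.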

To get a clearer view of exactly what was happening in the browser, we added instrumentation based on PerformanceObserver, using the Event Timing and Long Tasks APIs now available in modern browsers. We sampled a percentage of all interactions that took longer than 16ms (approximately one frame at 60 frames per second) and all tasks that blocked the UI for more than 50ms (this threshold is fixed by the Long Tasks API and is what Chrome defines as a "long task"). Our goal was to push the average and the 99th percentile of these long tasks and event timings drastically downward.
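A minimal sketch of this kind of instrumentation follows. One `PerformanceObserver` subscribes to `event` entries (with the Event Timing API's `durationThreshold` set to its 16ms minimum) and to `longtask` entries. A sampling step then decides what to report. The names `toSample` and `observeLatency`, the `report` callback, and applying the same sample rate to both entry types are assumptions for illustration. The post does not say how Canvas sampled or shipped the data.

```typescript
// Thresholds from the post: 16ms is roughly one frame at 60fps, and 50ms is
// the Long Tasks API's fixed definition of a "long task".
const EVENT_THRESHOLD_MS = 16;
const LONG_TASK_THRESHOLD_MS = 50;

interface LatencySample {
  kind: "event" | "longtask";
  name: string; // e.g. "keydown" or "click" for event entries
  durationMs: number;
}

type EntryLike = Pick<PerformanceEntry, "entryType" | "name" | "duration">;

// Hypothetical helper: apply the thresholds, then keep roughly `sampleRate`
// (between 0 and 1) of the qualifying entries.
function toSample(
  entry: EntryLike,
  sampleRate: number,
  random: () => number = Math.random,
): LatencySample | null {
  let kind: LatencySample["kind"];
  if (entry.entryType === "event" && entry.duration >= EVENT_THRESHOLD_MS) {
    kind = "event";
  } else if (entry.entryType === "longtask" && entry.duration > LONG_TASK_THRESHOLD_MS) {
    kind = "longtask";
  } else {
    return null;
  }
  if (random() >= sampleRate) return null; // outside the sampled fraction
  return { kind, name: entry.name, durationMs: entry.duration };
}

// Browser-only wiring: one observer registered for both entry types.
function observeLatency(
  report: (sample: LatencySample) => void,
  sampleRate: number,
): PerformanceObserver {
  const observer = new PerformanceObserver((list) => {
    for (const entry of list.getEntries()) {
      const sample = toSample(entry, sampleRate);
      if (sample) report(sample);
    }
  });
  // durationThreshold belongs to the Event Timing API (16 is its minimum);
  // the cast is there because some TypeScript DOM typings omit the field.
  observer.observe({
    type: "event",
    buffered: true,
    durationThreshold: EVENT_THRESHOLD_MS,
  } as PerformanceObserverInit);
  observer.observe({ type: "longtask", buffered: true });
  return observer;
}
```

Keeping the filtering in a pure function like `toSample` makes the thresholds and sampling easy to unit test without a browser. A rate such as 0.1 would report about one in ten qualifying entries. The rate Canvas actually used is not stated.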

Once we had this monitoring in place, we began using tools like why-did-you-render, the Chrome CPU and heap profilers, the React Profiler, Redux DevTools, and some additional custom tools developed specifically for this investigation.

What we found

In broad strokes, here’s what our investigation revealed.

Heavy reliance on global state

Much of our front-end state is stored in a global Redux store. This makes it easy for components on the page to access the same data, but it comes with a heavy cost: every time the store is updated, each component subscribed to it has to do work to determine whether it needs to re-render. When we started building Canvas, this was the conventional wisdom for React/Redux applications. As time has gone by and hard-won performance battles have been fought, however, it has become clear that limiting both the data stored in global state and the number of times it is updated leads to better performance.
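To make that cost concrete, here is a deliberately tiny stand-in for a Redux store in TypeScript. It is not Redux's real implementation; `createStore`, `connect`, and `simulate` here are hypothetical names. Each subscriber re-runs its selector after every update and compares the result by reference, which is roughly how React Redux decides whether a component must re-render.

```typescript
interface Store<S> {
  getState(): S;
  setState(next: S): void;
  subscribe(listener: () => void): void;
}

interface Counters {
  selectorCalls: number;
  renders: number;
}

function createStore<S>(initial: S): Store<S> {
  let state = initial;
  const listeners: Array<() => void> = [];
  return {
    getState: () => state,
    setState(next: S) {
      state = next;
      // Every subscriber is notified of every update, relevant or not.
      for (const listener of listeners) listener();
    },
    subscribe(listener: () => void) {
      listeners.push(listener);
    },
  };
}

// Stands in for a connected component: re-runs its selector after each update
// and counts a "render" only when the selected value changes by reference.
function connect<S, T>(store: Store<S>, selector: (state: S) => T, counters: Counters): void {
  let selected = selector(store.getState());
  store.subscribe(() => {
    counters.selectorCalls++;
    const next = selector(store.getState());
    if (next !== selected) {
      selected = next;
      counters.renders++;
    }
  });
}

// Hypothetical state: components read patientNames; updates touch another slice.
interface AppState {
  patientNames: string[];
  unrelatedTicks: number;
}

function simulate(componentCount: number, updateCount: number): Counters {
  const counters: Counters = { selectorCalls: 0, renders: 0 };
  const store = createStore<AppState>({ patientNames: ["Ada"], unrelatedTicks: 0 });
  for (let i = 0; i < componentCount; i++) {
    connect(store, (s) => s.patientNames, counters);
  }
  for (let u = 0; u < updateCount; u++) {
    const s = store.getState();
    store.setState({ ...s, unrelatedTicks: s.unrelatedTicks + 1 });
  }
  return counters;
}
```

With 1,000 subscribed components and 10 updates to an unrelated slice, `simulate(1000, 10)` performs 10,000 selector calls even though nothing re-renders. The work grows with subscribers times updates.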

Updating the global state too frequently

We exacerbated the problem of too many state subscriptions by updating our state on every keypress and mouse click, due to our reliance on a library called redux-form. Redux-form makes working with form inputs easy, but it stores the in-progress form in the global Redux state and updates that state on every keypress. This forces every subscribed component on the page either to re-render or to do the work needed to determine whether it should. This is the root cause of the “typing latency” some of our customers experienced.

Using lodash helpers in selectors

Many of our Redux selectors (small functions responsible for selecting subtrees of the global state) were built from combinations of the lodash pipe, compose, get, getOr, and has methods. When this code was written, lodash was a helpful addition because it gracefully handled the case where a deeply nested attribute within the state didn’t exist. Over time, however, these layers of helper calls added overhead to selectors that run on every store update, and they made our CPU profiles hard to read.

Unnecessary work

Many of the performance issues fell into the category of unnecessary work. Some components selected objects or functions that were created anew on every selector call. These never compare equal under strict equality (the default in React Redux), so the components did extra work on every store update even when the underlying data had not changed.
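A small illustration of the pattern, using a hypothetical state shape and selector names: a selector that builds a new object or closure on each call never passes a `===` check. A shallow comparison of the object's fields does. The `shallowEqual` below is hand-written for the example, in the spirit of the helper React Redux exports, and is not that library's code.

```typescript
// Hypothetical state shape for illustration.
interface PatientState {
  patient: { id: string; firstName: string; lastName: string };
}

// Anti-pattern: a fresh object on every call, so `===` always reports a change.
const selectPatientSummary = (state: PatientState) => ({
  id: state.patient.id,
  fullName: `${state.patient.firstName} ${state.patient.lastName}`,
});

// Anti-pattern: a fresh closure on every call has the same problem.
const selectGreeter = (state: PatientState) => () => `Hello, ${state.patient.firstName}`;

// Compares own enumerable keys one level deep. Functions and other non-objects
// are only equal when identical, so this does not rescue the closure case.
function shallowEqual(a: object, b: object): boolean {
  if (Object.is(a, b)) return true;
  if (typeof a !== "object" || typeof b !== "object" || a === null || b === null) {
    return false;
  }
  const keysA = Object.keys(a);
  const keysB = Object.keys(b);
  if (keysA.length !== keysB.length) return false;
  const recA = a as Record<string, unknown>;
  const recB = b as Record<string, unknown>;
  return keysA.every(
    (key) => Object.prototype.hasOwnProperty.call(b, key) && Object.is(recA[key], recB[key]),
  );
}
```

In React Redux, a comparator like this can be passed as the second argument to `useSelector`. Selecting primitive values individually also avoids the problem, since strings and numbers compare equal under `===`.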

How we addressed the problems

Here's how we approached solutions, given the urgency and our longer-term roadmap.

Heavy reliance on global state & updating the global state too frequently

With so many components on the page connected to the global state, and so many small, granular state updates happening during regular use of the application, it made sense to address both issues together. We’re in the process of removing redux-form entirely so that form updates no longer cause global state updates. Redux-form is an especially bad case because every keystroke causes a global state update, and all connected components (thousands in our case) then have to check whether they need to update. For too long our ethos was “everything should live in the global state.” We now have nearly the opposite ethos: “only shared state should be global; everything else should live with the component.” This drastically reduces both the number of connected components and the number of global state updates, bringing them down to a level that performs well.
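A minimal TypeScript sketch of the difference follows, with hypothetical names (`GlobalStore`, `typeGlobally`, `typeLocally`). The first strategy writes every keystroke to global state, as redux-form does. The second keeps the draft local to the component and shares only the finished value. Committing on blur or submit is an assumption for illustration. The sketch counts global updates rather than rendering anything.

```typescript
// Minimal stand-in for a Redux store that only counts updates.
class GlobalStore<S> {
  updates = 0;
  private state: S;
  constructor(initial: S) {
    this.state = initial;
  }
  getState(): S {
    return this.state;
  }
  update(next: S): void {
    this.updates++; // in Redux, each update would notify every subscriber
    this.state = next;
  }
}

interface FormState {
  note: string;
}

// redux-form style: every keystroke writes the in-progress value globally.
function typeGlobally(store: GlobalStore<FormState>, text: string): void {
  let draft = "";
  for (const ch of text) {
    draft += ch;
    store.update({ note: draft });
  }
}

// Component-local draft: keystrokes stay local (think useState inside the
// form component); only the finished value is shared, e.g. on blur or submit.
function typeLocally(store: GlobalStore<FormState>, text: string): void {
  let draft = "";
  for (const ch of text) draft += ch; // each keystroke updates local state only
  store.update({ note: draft }); // a single global update on commit
}
```

Typing the 12-character word "hypertension" produces 12 global updates with the first strategy and 1 with the second. With thousands of connected components, each avoided update also avoids thousands of subscriber checks.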

Using lodash helpers in selectors

Now that JavaScript has optional chaining, many of our uses of lodash are no longer needed. Rewriting our hundreds of selectors in vanilla TypeScript yielded big wins in performance (as well as more readable CPU profiles). It’s hard to overstate how much more readable our flame graphs became without lodash helper methods everywhere. This unblocked further performance improvements because we could better understand where the application was spending its time.
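As an illustration of that kind of rewrite, here are two selectors over a hypothetical state shape. The lodash/fp form in the comment approximates the style described and is not code taken from Canvas. The vanilla versions use optional chaining (`?.`) and nullish coalescing (`??`) in place of `get`, `getOr`, and `has`.

```typescript
// Hypothetical state shape; optional fields model data that may not be loaded.
interface ChartState {
  patients?: {
    byId?: Record<string, { name?: string; allergies?: string[] } | undefined>;
  };
}

// A lodash/fp-style version might have read:
//   getOr("Unknown", ["patients", "byId", id, "name"])(state)
// Note: `??` also falls back on null, while lodash's getOr only does so
// when the resolved value is undefined.
function selectPatientName(state: ChartState, id: string): string {
  return state.patients?.byId?.[id]?.name ?? "Unknown";
}

// Replaces a lodash `has`/`get` combination for an "exists and non-empty" check.
function selectHasAllergies(state: ChartState, id: string): boolean {
  return (state.patients?.byId?.[id]?.allergies?.length ?? 0) > 0;
}
```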

Unnecessary work

Adding memoization helped remove unnecessary work, as did changing how we compared equality. We moved from lodash’s isEqual to fast-equals’ circularDeepEqual, which is much, much faster.
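The memoization half of this can be sketched as follows. `memoizeLast` and `selectSortedVitals` are hypothetical names. The helper is a hand-rolled stand-in for libraries such as reselect or memoize-one, not what Canvas actually used. Its `equals` parameter is where a faster deep comparison, such as fast-equals’ `circularDeepEqual`, could be plugged in (that library is not imported here).

```typescript
type Equals = (a: unknown, b: unknown) => boolean;

// Caches the last input and result; recomputes only when `equals` says the
// input changed. Defaults to reference equality.
function memoizeLast<A, R>(
  compute: (input: A) => R,
  equals: Equals = Object.is,
): (input: A) => R {
  let hasCache = false;
  let lastInput: A;
  let lastResult: R;
  return (input: A): R => {
    if (hasCache && equals(lastInput, input)) return lastResult;
    lastInput = input;
    lastResult = compute(input);
    hasCache = true;
    return lastResult;
  };
}

// Hypothetical derived data: vitals sorted by name.
interface Vital {
  name: string;
  value: number;
}
let sortCount = 0; // counts actual recomputations for demonstration

const selectSortedVitals = memoizeLast((vitals: Vital[]) => {
  sortCount++;
  return [...vitals].sort((a, b) => a.name.localeCompare(b.name));
});
```

Returning the cached array also keeps its reference stable, so components comparing with strict equality will not re-render. A deep `equals` trades a comparison on every call for fewer recomputations, so it pays off mainly when `compute` is expensive or its result feeds many components.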

The Results

We were able to obliterate the long tail of latency experienced in our application, dropping 99th percentile UI latency by 40% overall and 60% for the subset of customers who were most affected.

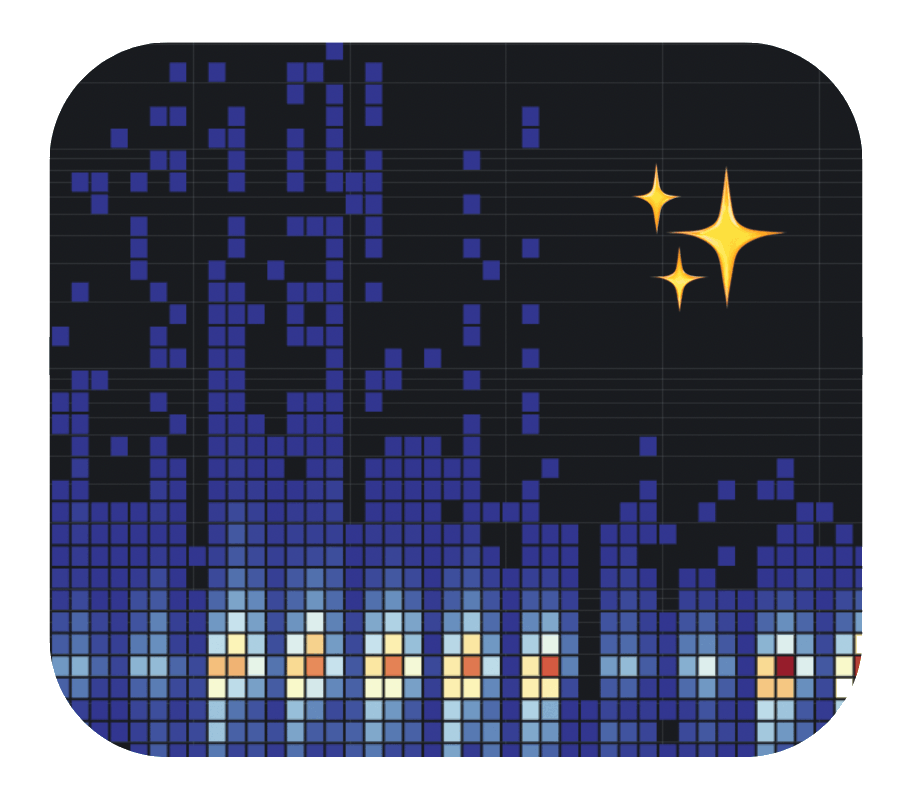

For a closer look at one of the worst-offending keypress events contributing to the higher-level UI latency metric, take a look at the heatmap below. It is a time series of keypress duration histograms on a log scale. Before June 8th, you can see the long tail as blue "flames" shooting up to 10 seconds or more. After the 8th, the flames stop at around 200 milliseconds, a 50X improvement!

There’s still more work to be done, but the tools and techniques we implemented for this work will be invaluable for ensuring that our performance never slides backward. The new latency metrics allow us to alert on regressions and to trace each one back to a specific change in our codebase.
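One simple way to turn latency samples into a regression alert is sketched below in TypeScript under stated assumptions. It computes p99 with the nearest-rank method and compares the current window against a baseline window. The function names and the 20% tolerance are hypothetical. The post does not describe how Canvas's alerts are actually defined.

```typescript
// Nearest-rank percentile: the smallest sample such that at least p% of
// samples are less than or equal to it.
function percentile(samples: number[], p: number): number {
  if (samples.length === 0) throw new Error("no samples");
  const sorted = [...samples].sort((a, b) => a - b);
  // Multiply before dividing to avoid floating-point error in the rank.
  const rank = Math.ceil((p * sorted.length) / 100); // 1-based rank
  return sorted[Math.max(0, rank - 1)];
}

// Hypothetical alert rule: flag a regression when the current p99 exceeds the
// baseline p99 by more than `tolerance` (0.2 means 20%).
function isP99Regression(baseline: number[], current: number[], tolerance = 0.2): boolean {
  return percentile(current, 99) > percentile(baseline, 99) * (1 + tolerance);
}
```

A tolerance band like this keeps normal variation from paging anyone. Comparing windows that straddle a release is one way to tie a regression to a specific deploy.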

What's Next

We have much more in the pipeline at Canvas that will continue to improve performance while furthering our commitments to correct informatics, platform completeness, and extensibility. One of the key initiatives still underway is integrating synthetic performance metrics into our CI pipeline so we can guard against future latency regressions. We will also share a public dashboard with transparent performance KPIs so you can follow along as we continue driving down latency.

If you are a software engineer interested in solving important engineering problems in the name of good medicine, please check out our careers page or simply reach out. We are hiring!

If you are a care delivery organization that needs a platform that doesn't force you to adapt your care model to the constraints of the system, we would love for you to try Canvas out. We would be happy to set you up with a sandbox and walk you through how clinicians × (software + developers) = better care.